
From Siri to Chatbots – How Natural Language Processing is Transforming AI Assistants

Natural Language Processing (NLP) is a branch of artificial intelligence (AI) that focuses on the interaction between humans and computers through natural language.

Over the years, NLP has played a crucial role in transforming AI assistants, from the early days of Siri to the advanced chatbots we see today.

From its beginnings to the present day, NLP has advanced significantly, revolutionising the way we interact with technology.

Early Origins of NLP

The roots of NLP can be traced back to the 1950s, when the field of AI first emerged.

Early researchers were intrigued by the possibility of machines understanding and processing natural language, just like humans.

The goal was to bridge the gap between human communication and machine comprehension.

Pioneer contributors to NLP

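One of the pioneering contributors to NLP was the mathematician and computer scientist Alan Turing. In 1950, Turing proposed what became known as the “Turing Test”, which assesses whether a machine can show human-like intelligence by holding a typed natural-language conversation that a human judge cannot reliably tell apart from one with a person.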

Throughout the 1960s and 1970s, NLP researchers developed various methods and techniques, including machine translation systems and rule-based systems such as Joseph Weizenbaum’s ELIZA (1966), to understand and generate human language.

Although these early attempts were promising, practical applications of NLP were still limited.

The Rise of Statistical NLP

In the 1980s, a shift occurred in the field of NLP with the introduction of statistical models and machine learning techniques.

Researchers began to collect vast amounts of linguistic data and use probabilistic algorithms to analyse language patterns.

This approach allowed NLP systems to improve their accuracy and handle a wider range of linguistic tasks.

One notable milestone during this period was the application of Hidden Markov Models (HMMs) to speech recognition.

HMMs became a fundamental tool in NLP, enabling the advancement of voice-controlled systems such as automated attendants and voice assistants.
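To make this concrete, here is a minimal sketch of Viterbi decoding, the standard dynamic-programming algorithm for recovering the most likely sequence of hidden states (for example, sound units) from a sequence of observations in an HMM. The state names, observation symbols, and all probabilities are made-up toy values, not a real acoustic model; production recognisers work with continuous acoustic features rather than two symbols.

```python
import math


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden-state path for `observations`.

    Works in log space to avoid numerical underflow on long sequences.
    """
    # best[t][s] = log-probability of the best path that ends in state s at time t
    best = [{s: math.log(start_p[s]) + math.log(emit_p[s][observations[0]]) for s in states}]
    back = [{}]  # back[t][s] = predecessor of s on that best path

    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            # Choose the predecessor that maximises the path score into state s
            prev, score = max(
                ((p, best[t - 1][p] + math.log(trans_p[p][s])) for p in states),
                key=lambda pair: pair[1],
            )
            best[t][s] = score + math.log(emit_p[s][observations[t]])
            back[t][s] = prev

    # Trace back from the best final state
    last = max(best[-1], key=best[-1].get)
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    return list(reversed(path))


# Hypothetical toy model: two sound units emitting coarse acoustic features
states = ["S", "AH"]
start_p = {"S": 0.6, "AH": 0.4}
trans_p = {"S": {"S": 0.7, "AH": 0.3}, "AH": {"S": 0.4, "AH": 0.6}}
emit_p = {
    "S": {"hiss": 0.8, "tone": 0.2},
    "AH": {"hiss": 0.1, "tone": 0.9},
}

print(viterbi(["hiss", "hiss", "tone", "tone"], states, start_p, trans_p, emit_p))
# ['S', 'S', 'AH', 'AH']
```

With these toy numbers the decoder labels the two hiss-like frames as `S` and the two tone-like frames as `AH`.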

Modern Breakthroughs and Deep Learning

The 21st century witnessed significant breakthroughs in NLP, thanks to the rise of deep learning techniques and the accessibility of big data.

Deep learning, which trains neural networks with many layers, has made it possible for NLP models to learn from large amounts of text data. This has improved their grasp of context and made their responses more human-like.

One groundbreaking moment came in 2013, when researchers at Google introduced a technique called Word2Vec.

Word2Vec used neural networks to learn word representations and capture semantic relationships between words.

This innovation revolutionised the way NLP algorithms processed language and demonstrated the power of distributed word embeddings.
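The idea behind word embeddings is that each word becomes a dense vector, so semantic relatedness can be measured geometrically, most commonly with cosine similarity. The sketch below uses tiny hand-written 3-dimensional vectors purely for illustration; real Word2Vec embeddings are learned from a corpus and typically have a few hundred dimensions.

```python
import math

# Hypothetical toy embeddings (hand-picked, NOT trained by Word2Vec)
embeddings = {
    "king": [0.8, 0.65, 0.1],
    "queen": [0.75, 0.7, 0.15],
    "apple": [0.1, 0.05, 0.9],
}


def cosine(u, v):
    """Cosine similarity: 1.0 for vectors pointing the same way, 0.0 for orthogonal ones."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(word, vocab):
    """Rank the other words in `vocab` by cosine similarity to `word`."""
    target = vocab[word]
    scores = [(other, cosine(target, vec)) for other, vec in vocab.items() if other != word]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


for other, score in most_similar("king", embeddings):
    print(f"king ~ {other}: {score:.3f}")
```

With these hand-picked vectors, “queen” scores far closer to “king” than “apple” does; with trained embeddings, the same measure is what surfaces related words.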

Another notable breakthrough came in 2017 with the Transformer, a deep learning architecture that quickly became a cornerstone of modern NLP models.

Transformers, with their attention mechanisms, revolutionised language understanding tasks, enabling advancements in machine translation, sentiment analysis, and question-answering systems.
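At the core of the Transformer is scaled dot-product attention: each query is compared with every key, the scores are divided by √d_k and normalised with a softmax, and the resulting weights are used to average the value vectors. The pure-Python sketch below follows that formula for a single attention head with no learned projection matrices, masking, or batching; the input vectors are arbitrary toy numbers.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy token vectors used as queries, keys and values (self-attention)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row is a set of attention weights summing to 1; real Transformers add learned query, key, and value projections and run several such heads in parallel.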

The Impact on AI Assistants

The evolution of NLP has had a profound impact on AI assistants such as Siri, Alexa, and Google Assistant.

These intelligent virtual assistants have become part of many people’s daily lives, providing personalised recommendations, answering queries, and even engaging in natural conversations.

With the advancements in NLP, AI assistants can now understand and interpret user input with impressive accuracy.

They can extract relevant information, perform complex language processing tasks, and provide meaningful responses in real-time.

The future of NLP and AI assistants holds even more promise.

Ongoing research is focused on improving how AI assistants understand context, emotions, and user behaviour. This will make them more intuitive and empathetic when interacting with users.

NLP has come a long way since its early days, transforming the field of AI and revolutionising how we communicate with machines.

As NLP continues to evolve, we can expect even more exciting developments that will drive the next generation of AI assistants.

NLP and Siri

Natural Language Processing (NLP) plays a central role in the functionality of Siri, Apple’s virtual assistant.

When Siri was first introduced in 2011, its NLP capabilities were relatively basic. It could understand simple commands and answer a limited range of questions.

However, as technology has advanced, so have Siri’s NLP capabilities.

Today, Siri processes complex language structures, recognises context, and even handles ambiguous queries.

By training on large amounts of language data, sophisticated NLP models such as deep neural networks can accurately infer what users mean.

Rather than requiring users to use specific keywords or phrases, Siri understands and responds to queries in a conversational manner.

This means that users can ask Siri questions or issue commands in a way that feels natural and intuitive.

Siri’s NLP capabilities also extend beyond understanding individual requests to tracking context across a conversation.

Siri understands that when you ask who the President of the United States is and then ask how tall he is, “he” refers to the President.

This context-awareness allows Siri to provide more accurate and relevant responses.
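A very simplified way to picture this behaviour is a dialogue state that remembers the most recently mentioned entity and substitutes it for a later pronoun. The sketch below is a hypothetical illustration of that idea only (the `DialogueContext` class and its fixed pronoun list are invented for this example); it is not how Siri is implemented, and production systems use learned coreference models instead.

```python
import re

# Toy pronoun list; real systems use learned coreference models instead.
PRONOUNS = ["he", "her", "him", "it", "she", "they"]
PRONOUN_PATTERN = re.compile(r"\b(" + "|".join(PRONOUNS) + r")\b")


class DialogueContext:
    """Hypothetical dialogue state that remembers the last entity mentioned."""

    def __init__(self, known_entities):
        self.known_entities = [e.lower() for e in known_entities]
        self.last_entity = None

    def resolve(self, utterance):
        text = utterance.lower()
        # If the user names a known entity, remember it for later turns.
        for entity in self.known_entities:
            if entity in text:
                self.last_entity = entity
                return text
        # Otherwise, replace the first pronoun with the remembered entity.
        if self.last_entity is None:
            return text
        return PRONOUN_PATTERN.sub(self.last_entity, text, count=1)


ctx = DialogueContext(["the President of the United States"])
print(ctx.resolve("Who is the President of the United States?"))
# who is the president of the united states?
print(ctx.resolve("How tall is he?"))
# how tall is the president of the united states?
```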

Over time, Siri’s NLP capabilities have evolved to provide more personalised responses.

Siri learns from user interactions and adapts its responses to better suit individual preferences.

If a user frequently asks Siri for restaurant recommendations, Siri can learn the user’s dining preferences and suggest relevant options based on their previous interactions.

Language Models

Another significant advancement in NLP is the development of powerful language models, such as OpenAI’s GPT-3 (Generative Pre-trained Transformer 3).

These models are pre-trained on vast amounts of text data and can generate coherent, contextually relevant text based on a given prompt.

Language models like GPT-3 have the potential to revolutionise how AI assistants interact with users.

Built on such models, AI assistants can generate human-like responses, hold conversations, and write articles on specific topics. This opens new possibilities for more engaging and interactive user experiences.

Transfer Learning and Fine-tuning

Transfer learning has emerged as a powerful technique in NLP, enabling models trained on one task to be applied to another related task.

This approach allows AI assistants to leverage pre-trained models and adapt them to specific applications or domains.

By fine-tuning pre-trained models, AI assistants can quickly learn and adapt to new tasks or domains with minimal additional training data.

This reduces the time and resources required to develop AI assistants for specific purposes, making them more accessible and cost-effective.
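The simplest form of this idea can be sketched in miniature: keep a “pre-trained” feature extractor frozen and train only a small new output layer on a handful of labelled examples (sometimes called linear probing; full fine-tuning would also update the pre-trained weights). In the sketch below, `frozen_features` is a hypothetical stand-in for a real pre-trained encoder, and the new head is a logistic-regression classifier trained with plain gradient descent.

```python
import math


def frozen_features(text):
    """Hypothetical stand-in for a frozen pre-trained encoder.

    Returns a fixed 3-number representation; nothing in it is ever updated.
    """
    words = text.lower().split()
    positive = sum(w in {"great", "love", "excellent", "good"} for w in words)
    negative = sum(w in {"bad", "awful", "hate", "poor"} for w in words)
    return [positive, negative, 1.0]  # last entry acts as a bias input


def train_head(examples, epochs=200, lr=0.5):
    """Train only a logistic-regression head on top of the frozen features."""
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for text, label in examples:
            x = frozen_features(text)
            z = sum(wi * xi for wi, xi in zip(w, x))
            p = 1.0 / (1.0 + math.exp(-z))
            # Gradient of the log-loss w.r.t. each head weight is (p - label) * x_i
            w = [wi - lr * (p - label) * xi for wi, xi in zip(w, x)]
    return w


def predict(w, text):
    z = sum(wi * xi for wi, xi in zip(w, frozen_features(text)))
    return 1 if z > 0 else 0


# A handful of labelled examples for the new task (1 = positive review)
train = [
    ("great product love it", 1),
    ("excellent and good value", 1),
    ("bad quality awful support", 0),
    ("i hate it poor design", 0),
]
head = train_head(train)
print(predict(head, "good and great"), predict(head, "awful and bad"))
```

Only the three head weights change during training, which is why so few labelled examples suffice in this toy setting.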

Conversational AI and Dialogue Systems

AI assistants can now engage in more natural and human-like conversations, understand complex queries, and provide contextually relevant responses.

NLP-powered dialogue systems can handle multi-turn conversations and keep track of context. They can generate personalised responses that consider user preferences and intentions. This makes AI assistants more useful and effective by providing tailored experiences.

NLP Challenges

NLP has made great progress in recent years.

It has achieved impressive results in tasks like machine translation, sentiment analysis, and question answering.

However, there are still challenges that researchers and practitioners need to overcome to further improve NLP systems.

Understanding Context

One of the major challenges in NLP is understanding the context of a given text.

Language is inherently ambiguous, and the meaning of a word or phrase can vary depending on the surrounding context.

For example, the word “bank” can refer to a financial institution or the edge of a river.

Resolving such ambiguities requires sophisticated models that can consider the larger context and make accurate predictions.
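One classic, pre-neural approach to this kind of ambiguity is the simplified Lesk algorithm: choose the sense whose dictionary gloss shares the most words with the surrounding sentence. The two glosses for “bank” and the stopword list below are hand-written assumptions for illustration, and modern systems rely on contextual embeddings instead, but the sketch shows how surrounding context can decide between senses.

```python
import re

# Hypothetical hand-written glosses for two senses of "bank"
SENSES = {
    "bank/finance": "an institution that accepts deposits of money and makes loans to customers",
    "bank/river": "the sloping land along the edge of a river or stream where water flows",
}

STOPWORDS = {"a", "an", "the", "of", "and", "or", "to", "that", "where",
             "along", "at", "on", "in", "my", "i", "we", "by"}


def tokens(text):
    """Lowercase content words, with stopwords removed."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def simplified_lesk(sentence, senses):
    """Return the sense whose gloss overlaps most with the sentence."""
    context = tokens(sentence)
    return max(senses, key=lambda sense: len(context & tokens(senses[sense])))


print(simplified_lesk("I need to deposit money at the bank before it closes", SENSES))
# bank/finance
print(simplified_lesk("We had a picnic on the bank of the river and watched the water", SENSES))
# bank/river
```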

Another aspect of context understanding is capturing the implied meaning or sentiment of a sentence.

Sarcasm, irony, and other forms of figurative language pose significant challenges for NLP systems.

Identifying and interpreting these nuances requires a deep understanding of the underlying cultural and social contexts, making it a complex task for machines.

Data Limitations

Many NLP models rely heavily on large amounts of labelled data for training.

However, obtaining annotated data is often time-consuming and expensive, and the resulting annotations may reflect human biases.

Moreover, for specialised domains and low-resource languages, labelled data is even scarcer.

This data scarcity hampers the development of effective NLP systems for such areas.

Domain-specific datasets

NLP models trained on general-purpose datasets may struggle to perform well on domain-specific texts or specialised tasks.

Building domain-specific datasets is not always feasible, making the transferability of models a crucial challenge in NLP research.

Future Directions

Despite these challenges, the future of NLP looks promising.

Here, we discuss some of the exciting research directions that could shape the field in the coming years.

Contextual Understanding

To improve the contextual understanding of NLP systems, researchers are exploring advanced models such as transformer-based architectures.

These models can capture long-range dependencies and better understand the relationships between words and phrases.

Incorporating world knowledge and leveraging pre-trained contextual embeddings are also promising approaches to enhance context understanding.

Multi-Lingual and Cross-Lingual NLP

In today’s globalised world, NLP systems should be capable of handling multiple languages and transferring knowledge across them.

Cross-lingual models that can generalise across languages have gained significant attention. These models can learn common representations across different languages, facilitating tasks such as machine translation, cross-lingual information retrieval, and zero-shot learning.


What Is Copilot? Microsoft’s AI Assistant Explained

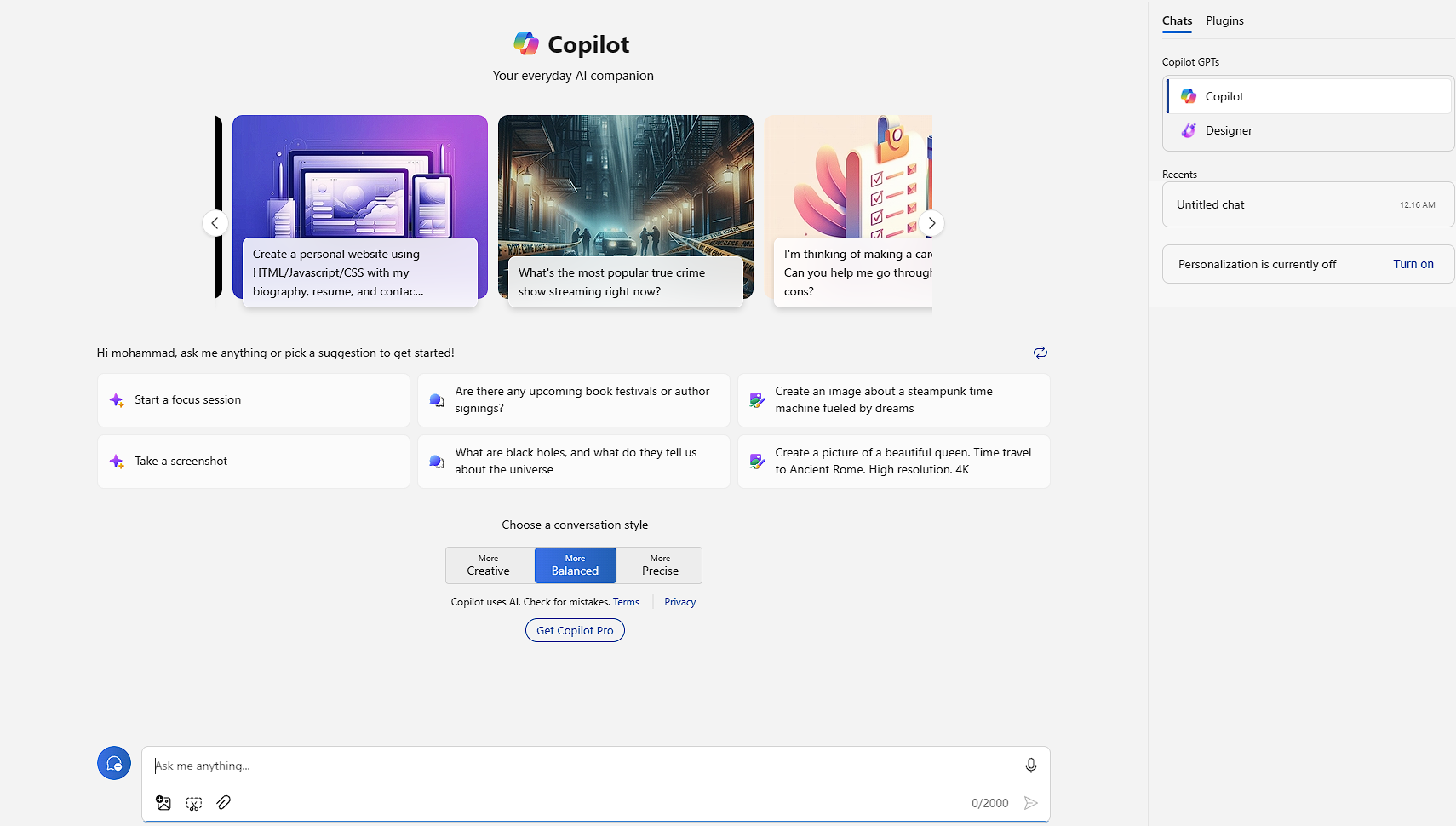

You may have heard about Microsoft’s Copilot generative AI making waves in Windows, Edge, Office apps, and Bing, but do you really understand what it is? In this blog post, we examine Copilot in detail, covering its features, capabilities, availability, pricing, and the technology behind this AI assistant, so you know everything you need to about Microsoft’s Copilot.

How Do You Get Copilot and What Can It Do?

Accessing Copilot via Microsoft 365

Copilot can be accessed directly in Microsoft 365 apps, including Word, Excel, Outlook, PowerPoint, OneNote, and Teams (Copilot for Teams requires a business subscription). Users with a paid subscription can utilize Copilot within these applications to generate text and images, ask questions, and receive suggestions. Additionally, businesses that install Microsoft 365 can incorporate their own data into Copilot’s responses, allowing for more personalized and relevant results.

Copilot’s Capabilities and Features

For individuals and small businesses, Copilot Pro, which costs $20 per month, offers faster results and integration with Office apps like Word, Excel, Outlook, and PowerPoint. On the other hand, Copilot for Microsoft 365, priced at $30 per person per month, caters to Business and Enterprise customers, providing tailored answers and suggestions based on company data. Furthermore, Copilot for Sales and Copilot for Service target specific business needs, such as generating sales meeting briefs or enhancing customer engagements in contact centers.

Copilot Website

Navigating the Copilot Website

One of the primary ways to access Microsoft’s Copilot generative AI service is through the Copilot website, copilot.microsoft.com. Here, users can utilize various plug-ins, such as those for OpenTable and Kayak, to enhance their Copilot experience. These plug-ins allow for tasks like booking restaurant reservations and searching travel options, making the Copilot website a versatile platform for AI assistance.

Setting Up an Account

Establishing a user account is key to getting the most out of Copilot on the website. Setting up an account is straightforward, ensures a personalized experience, and grants access to a range of features, including plug-ins and responses tailored to user preferences.

An account is required to use Copilot’s full range of functions and services. Once registered, users can apply Copilot to streamline tasks and enhance productivity across various online activities.

Exploring Online Resources and Support

With the Copilot website’s extensive resources and support features, users can explore a wealth of information and assistance. From FAQs to user guides, the platform offers comprehensive support for navigating Copilot’s functionalities effectively. Whether users are new to AI technology or seasoned professionals, the online resources and support tools on the Copilot website cater to a diverse range of needs.

The Copilot website aims to provide users with a seamless and informative experience, allowing them to explore the full potential of Microsoft’s AI assistant. By leveraging the online resources and support available, users can enhance their understanding of Copilot’s capabilities and optimize their usage for increased efficiency and productivity.

Copilot Sidebar in Windows

Installation and Setup

Many users find the Copilot Sidebar in Windows to be a convenient and efficient tool for navigating various functions within their operating system. With a simple installation process, users can easily set up the sidebar to access a range of features and tools.

Basic Functions and Shortcuts

The Copilot Sidebar in Windows gives users access to a variety of basic functions and shortcuts that enhance their overall experience. It provides quick access to changing Windows settings, text summarization, and other handy features, making it a valuable productivity tool.

Users can customize their user experience by selecting which functions and shortcuts they want to prioritize within the sidebar. This customization allows for a more tailored and efficient workflow, catering to individual preferences and needs.

Customizing the User Experience

Basic customization options within the Copilot Sidebar in Windows enable users to personalize their experience and optimize their workflow. By selecting preferred functions and shortcuts, users can streamline their tasks and improve efficiency.

Understanding how to customize the user experience within the Copilot Sidebar in Windows allows users to tailor their interactions with the AI assistant to suit their specific needs and preferences.

Copilot in Bing Search and the Bing Mobile App

Enhancing Search Results with AI

Search functionality in Bing gets a boost with Copilot’s AI capabilities, allowing users to seamlessly switch between AI-powered and regular search results by simply scrolling. This integration enhances search efficiency and accuracy, making it easier to find the information you need.

Voice-Activated Features and Accessibility

Features like voice input make interacting with Copilot in Bing effortless and convenient, providing users with a hands-free option for searching and generating text. This accessibility feature allows for a more inclusive user experience, catering to a wide range of individuals with varying needs.

Accessibility is a key focus for Copilot in Bing and the Bing Mobile App, ensuring that users can access and utilize the AI assistant’s features with ease, regardless of their physical capabilities or preferences.

Integration Tips for Maximum Efficiency

One way to maximize efficiency when using Copilot in Bing is by taking advantage of integration tips to streamline your workflow. Consider the following tips for seamless integration:

- Customize your Copilot experience by selecting specific plug-ins for enhanced functionality.

- Utilize voice commands for hands-free operation and increased productivity.

- After configuring your settings, explore advanced features such as text summarization and image creation for a more tailored experience.

Integrating Copilot effectively into your Bing search routine can significantly improve your search experience, making information retrieval faster and more intuitive. After setting up your preferences and exploring the available features, you’ll be able to harness the full potential of Copilot in Bing for optimal results.

Copilot Sidebar in the Edge Web Browser

Activating and Customizing the Sidebar

For users of the Edge web browser, the Copilot sidebar provides a convenient way to access AI assistance while browsing the internet. By activating the sidebar, users can easily customize their experience by selecting between regular text interactions with Copilot or utilizing image creation with Microsoft Designer. This feature offers a seamless integration of AI technology within the browsing experience, enhancing productivity and efficiency.

Browsing with AI Assistance

Copilot in the Edge web browser allows users to leverage AI assistance while browsing online. By enabling the sidebar, users can easily access Copilot’s capabilities to generate text and images based on prompts. This feature enhances the browsing experience by providing quick and relevant information, creating a more interactive and dynamic online experience for users.

Syncing Between Devices and Platforms

Edge users can enjoy the convenience of syncing their browsing experience across devices and platforms with the Copilot sidebar. By seamlessly transitioning between different devices, users can access AI assistance and continue their browsing activities without interruption. This synchronization feature enhances the user experience by ensuring continuity and consistency in utilizing Copilot’s capabilities.

Copilot Mobile Apps for Android and iOS

Installation Process for Mobile Devices

A vital aspect of using Copilot on your mobile device is the installation process. Keep in mind that the Copilot mobile apps for Android and iOS are identical and let you choose between the GPT-3.5 and GPT-4 AI models with a simple toggle button. Installing the app is straightforward and can be done directly from the respective app stores.

Cross-Functionality with Other Microsoft Apps

The Copilot mobile apps for Android and iOS seamlessly integrate with various Microsoft 365 apps, such as Word, Excel, Outlook, PowerPoint, OneNote, and Teams. This cross-functionality allows for a more productive and connected experience, enabling users to leverage Copilot’s capabilities directly within these popular Microsoft apps. With a paid subscription, businesses can also tap into Copilot for Teams, providing a tailored AI assistant experience within their organization.

Hands-Free Usage and Mobile Productivity

Mobile productivity is further enhanced through the hands-free usage capabilities of Copilot on mobile devices. By incorporating voice input functionality, users can interact with Copilot using their voice, offering a convenient and efficient way to generate text, images, and code on the go. This feature underscores the focus on enhancing productivity in various scenarios, whether you’re on the move or multitasking.

Microsoft 365 Apps

Integrating Copilot with Word, Excel, and PowerPoint

With a paid subscription, users can integrate Copilot directly into Microsoft 365 apps such as Word, Excel, Outlook, PowerPoint, OneNote, and Teams. This integration allows for seamless generation of text, images, and data analysis within the familiar Microsoft Office environment.

Leveraging AI for Data Analysis and Report Generation

Microsoft 365 users can harness Copilot to analyze large datasets, generate reports, and gain valuable insights quickly and efficiently. With Copilot, tasks that once took hours can often be completed in a fraction of the time.

With Copilot’s AI capabilities integrated into Microsoft 365 apps, users can enhance their workflow efficiency, accuracy, and productivity. Leveraging AI technology for data analysis and report generation streamlines processes and allows for more informed decision-making within organizations.

Collaborative Editing and Artificial Intelligence

One of the key features of Copilot in Microsoft 365 apps is its ability to support collaborative editing through AI-powered assistance. This feature enables teams to work together seamlessly, generating content, analyzing data, and creating presentations with the support of AI technology.

Excel, along with other Microsoft 365 apps, can benefit from Copilot’s collaborative editing features, enhancing teamwork and productivity within organizations. By leveraging AI for collaborative editing, teams can streamline their workflows and achieve better results in less time.

Is Copilot Free?

Understanding the Free Version’s Limitations

Microsoft’s Copilot AI is free to use in various forms, which many users appreciate. However, the free version comes with some limitations. Users should be aware that while Copilot is available in select regions and supports several languages, the free version may not offer all the features and capabilities of the paid versions. Additionally, users should exercise caution when entering private information, as the free version may use interactions for training purposes.

Comparing Features: Free vs. Premium

Comparing the features of the free version of Copilot with the premium versions can help users decide whether to invest in a subscription. Below is a breakdown of the key differences:

| Free Version | Premium Version |
| --- | --- |
| Available in select regions and languages | Expanded language and region support |
| May not include all features | Access to additional tools and capabilities |
| Usage of private information for training | Enhanced privacy and data protection |

When considering whether to upgrade to a premium version of Copilot, users should weigh the additional features and benefits against the cost of the subscription. The premium versions offer faster results, integration with Microsoft 365 apps, and the ability to generate responses based on company data, making them ideal for businesses and enterprises looking to enhance their productivity and efficiency.

How Much Does Copilot Pro Cost?

Pricing Structure

To access the full features and capabilities of Copilot, users can opt for the Copilot Pro subscription. For individuals and small businesses, the Copilot Pro subscription is priced at $20 per month. This tier offers faster results and integration with Office apps such as Word, Excel, Outlook, and PowerPoint. For larger organizations utilizing Microsoft 365, a Copilot for Microsoft 365 subscription is available at $30 per person per month, allowing for tailored solutions based on company data.
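To compare the two tiers for a team, the monthly list prices above can be turned into a simple annual estimate. The sketch below uses only the $20 and $30 figures quoted in this article; it ignores taxes, discounts, and any underlying Microsoft 365 subscription, and the team size is a hypothetical example.

```python
# Monthly list prices quoted in this article (USD); actual pricing may change.
COPILOT_PRO_MONTHLY = 20   # per user
COPILOT_M365_MONTHLY = 30  # per person


def annual_cost(seats, monthly_price_per_seat):
    """Annual cost in USD for a number of seats, excluding taxes and base licences."""
    return seats * monthly_price_per_seat * 12


team_size = 25  # hypothetical team
print("Copilot Pro:", annual_cost(team_size, COPILOT_PRO_MONTHLY))                # 6000
print("Copilot for Microsoft 365:", annual_cost(team_size, COPILOT_M365_MONTHLY))  # 9000
```

For this hypothetical 25-person team, that works out to $6,000 a year at the Copilot Pro price versus $9,000 for Copilot for Microsoft 365.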

Evaluating Cost-Effectiveness for Businesses

Understanding the cost-effectiveness of employing Copilot within a business setting is crucial. Copilot for Sales, for example, can generate sales meeting briefs and emails using CRM data, providing a streamlined approach to customer engagements. The Copilot for Service option, tailored for contact centers, draws on relevant company and customer data to make interactions faster and smoother, ultimately enhancing operational efficiency.

What Technology Is Behind Copilot?

Delving into Microsoft’s AI and Machine Learning

With the rise of artificial intelligence and machine learning, Microsoft has leveraged cutting-edge technology to power its Copilot AI assistant. Behind the scenes, Copilot relies on leading generative AI models from OpenAI, such as GPT-4 and DALL-E 3. These models are supported by Microsoft’s own natural language processing, text-to-speech technology, and Azure cloud services to enhance the user experience.

The Role of Large Language Models in Copilot

The foundation of Copilot’s functionality lies in large language models, particularly GPT-4, which is widely reported (though not confirmed by OpenAI) to have on the order of a trillion parameters. These large language models play a crucial role in understanding user queries, generating text, and providing accurate results. Coupled with Retrieval-Augmented Generation (RAG), Copilot can ground its responses in retrieved sources and deliver relevant information to users.
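Retrieval-augmented generation can be sketched in two steps: retrieve the passages most relevant to the user’s query, then place them in the prompt so the language model answers from that material and can cite it. The minimal sketch below ranks a tiny hypothetical document set by word overlap and builds a grounded prompt; the documents, the scoring, and the prompt format are illustrative assumptions, not how Copilot actually retrieves or prompts, and the final call to a language model is left out.

```python
import re

# Hypothetical document store: (source, text)
DOCUMENTS = [
    ("pricing.txt", "Copilot Pro costs 20 dollars per month for individual users."),
    ("m365.txt", "Copilot for Microsoft 365 costs 30 dollars per person per month."),
    ("edge.txt", "The Edge sidebar lets users open Copilot while browsing."),
]


def words(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, docs, k=2):
    """Return the k documents sharing the most words with the query."""
    q = words(query)
    ranked = sorted(docs, key=lambda doc: len(q & words(doc[1])), reverse=True)
    return ranked[:k]


def build_prompt(query, docs):
    """Ground the model: put retrieved passages (with their sources) before the question."""
    context = "\n".join(f"[{src}] {text}" for src, text in docs)
    return (
        "Answer using only the sources below and cite them.\n"
        f"{context}\n\nQuestion: {query}"
    )


query = "How much does Copilot for Microsoft 365 cost per person?"
top = retrieve(query, DOCUMENTS)
print(build_prompt(query, top))
```

Real systems typically replace word overlap with vector search over embeddings, but the shape of the pipeline (retrieve, then generate from the retrieved text) is the same.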

What Makes Copilot Different From Other Generative AI Chatbots?

Unique Features of Copilot

One of the key features that sets Copilot apart from other chatbots is its support for voice input alongside text. Additionally, Copilot allows users to upload images for certain tasks, a feature not offered by every AI service. Users can also choose the style of response from More Creative, More Balanced, or More Precise options. Moreover, Copilot provides prominent links to its information sources within the conversation interface.

Comparative Analysis with Competitors

| Features | Copilot |
| --- | --- |
| Voice Input | Available |
| Image Upload | Supported |

Other generative AI chatbots like Google Gemini and ChatGPT may lack the voice input feature and image upload compatibility that Copilot offers. By providing users with a choice of styles for responses, Copilot proves to be more versatile in meeting individual preferences. Additionally, Copilot’s integration of source links within the chat distinguishes it from other AI services, ensuring transparency and credibility.

Privacy and Copyright Issues With Copilot

Data Handling and Privacy Concerns

One concern with AI assistants like Copilot is how user data is handled and stored. Microsoft has outlined responsible AI standards to ensure transparency, fairness, and privacy for users. They conduct impact assessments and evaluations to mitigate potential risks. Additionally, commercial data protection is in place for work and school accounts, ensuring that customer data is not used to train the AI and that Microsoft does not have access to their Copilot interactions.

Navigating Intellectual Property with AI-Generated Content

Intellectual property issues arise when using content generated by AI tools like Copilot. Users must review, adapt, and attribute any generated content they use publicly. Microsoft’s Copilot Copyright Commitment policy protects paying customers in copyright infringement cases.

Copilot Is a Moving Target, and It’s Not Perfect

Updates and Iterative Improvements

Perfecting an AI assistant like Copilot is an ongoing process. Microsoft is constantly working on updates and iterative improvements to enhance its functionality and performance. With the rapid advancements in generative AI technology, Copilot continues to evolve to better understand user queries and generate relevant responses efficiently. Stay tuned for the latest updates on how Copilot is adapting to meet user needs.

Known Limitations and Common User Complaints

Copilot, like any AI tool, has its limitations and may not always produce perfect results. Users have reported instances where Copilot’s responses were not entirely accurate or where it took longer to generate desired content. However, Microsoft is actively addressing these common complaints and working to enhance Copilot’s accuracy and efficiency. This ongoing feedback loop helps improve the overall user experience with the AI assistant.

Leveraging Copilot for Business and Education

Copilot in Professional Environments

Professional environments can benefit greatly from Microsoft Copilot’s AI assistance. With features such as generating text, summarizing information, creating images, and even writing code in various languages like JavaScript and Python, Copilot can streamline tasks and boost productivity in workplaces. Additionally, businesses that install Microsoft 365 can integrate Copilot with their data, allowing for tailored responses based on internal information. This ensures that Copilot can assist in handling specific business needs efficiently.

AI-Assisted Learning and Research

Copilot’s AI capabilities are not limited to professional environments but extend to educational settings as well. For instance, students and researchers can use Copilot to generate text for essays, summarize complex information, and even assist in coding projects. Moreover, the AI’s ability to provide relevant and accurate information can enhance the learning and research experience, helping individuals in these fields achieve better results in a more efficient manner.

Technical Support and Customer Service

Accessing Help Resources

With Microsoft’s Copilot, help resources are essential for resolving technical issues or getting answers to your questions. The AI assistant provides a range of support options to help users get the most out of the service. Whether you encounter difficulties in using the platform or need guidance on specific features, tapping into these resources can help streamline your interactions with Copilot.

Community Support and User Forums

Technical assistance for Copilot can also be sought through community support and user forums, where users can exchange insights, troubleshooting tips, and best practices. Engaging with fellow users and experts in the field can offer valuable perspectives and solutions to common challenges. These forums serve as a hub for sharing knowledge and fostering a collaborative environment for enhancing your Copilot experience.

Additionally, actively participating in user forums can lead to networking opportunities and a deeper understanding of the capabilities of Copilot, enabling users to leverage the AI assistant more effectively in their workflows. By tapping into the collective expertise of the community, users can glean valuable insights and stay abreast of the latest developments in generative AI technology.

Future Developments and Roadmap

Upcoming Features and Updates

Not only is Microsoft’s Copilot generative AI already making waves in various Microsoft applications, but the future holds even more exciting developments. With the rapid evolution of technology, users can expect new features and updates to enhance their AI experience.

Microsoft’s Vision for AI in the Digital Workplace

Microsoft has a clear vision for the integration of AI in the digital workplace, aiming to revolutionize how businesses operate and employees collaborate. With Copilot at the forefront, Microsoft envisions a future where AI seamlessly assists in various tasks, from generating text and images to providing valuable insights based on real-time data.

As Microsoft continues to refine Copilot and explore new AI capabilities, users can anticipate a more intuitive and efficient digital workspace experience. With ongoing updates and advancements, Copilot is set to play a pivotal role in enhancing productivity and innovation in the digital workplace.

User Reviews and Testimonials

Exploring User Experiences with Copilot

To understand user experiences with Copilot, it helps to look at how individuals are actually interacting with this AI assistant. Users have reported positive experiences with its conversational chat interface, noting its ability to generate text and images and even write code in various programming languages. For many users, a highlight has been the convenience of asking Copilot specific questions and getting immediate responses.

Case Studies of Success Stories

For a more in-depth look at the impact Copilot has had on users, let’s look at some success stories that showcase the effectiveness of this AI assistant. Here are a few notable case studies:

- Success Story 1: A business saw a 30% increase in efficiency after implementing Copilot for Sales and using it to generate personalized sales meeting briefs and emails based on CRM data.

- Success Story 2: An individual reported a 40% reduction in time spent on administrative tasks by using Copilot Pro in Microsoft 365 apps for document summarization and text generation.

- Success Story 3: A team found that Copilot for Service helped streamline customer engagements, resulting in a 25% increase in customer satisfaction ratings.

Copilot Integration with Third-Party Apps

Expanding Copilot’s Capabilities

Integrations with third-party platforms and services play a crucial role in expanding Copilot’s capabilities. These integrations let users access a wide range of functionality through Copilot, enhancing productivity and efficiency. By connecting with various apps, Copilot becomes a versatile assistant capable of performing diverse tasks tailored to individual needs.

Custom Integrations and API Access

Custom integrations and API access allow businesses and developers to tailor Copilot’s functionality to specific requirements. This level of customization lets them build solutions that align with their own workflows and processes. By integrating Copilot with their existing systems and applications, organizations can streamline operations and improve overall performance.

Through custom APIs, organizations can build bespoke solutions around Copilot’s capabilities to optimize workflows, automate tasks, and improve efficiency. This level of integration allows businesses to harness the full potential of AI technology to drive innovation and success.

Conclusion

The advancement of AI technology has brought about Microsoft’s Copilot, an innovative AI assistant that offers a wide range of functions across various platforms. From generating text and images to providing coding help and personalized responses, Copilot showcases the potential of generative AI. While still evolving, Copilot’s integration across Windows, Edge, Office apps, and Bing demonstrates Microsoft’s commitment to harnessing AI for everyday tasks and productivity. As with any AI technology, Copilot has its strengths and limitations, but its ongoing development and adaptability in response to user feedback indicate a promising future for AI assistants in enhancing user experiences and efficiency.

Apple Ready to ‘Let Loose,’ Finally Introduce New iPads on May 7

Many Apple fans are eagerly awaiting the company’s upcoming special event, titled Let Loose, scheduled for May 7. This online-only reveal is set to showcase new Apple hardware, with a particular focus on the much-anticipated introduction of new iPads.

While Apple has not provided specific details about what will be unveiled at the event, hints from the announcement artwork suggest that a third-generation Apple Pencil may be in the works. This new stylus is rumored to be designed exclusively for the iPad Pro, featuring magnetized tips for easy switching and potential integration with the Find My network.

In addition to the new Apple Pencil, reports and rumors indicate that Apple will refresh its iPad lineup, which saw no new releases in 2023. Rumors point to upgrades across the board, with potential improvements such as updated FaceTime cameras for the iPad Pro series, OLED displays for the Pro models, and a thinner chassis in line with modern tablet design.

Renowned Apple insider Mark Gurman hinted at the upcoming iPad event with a concise tweet, further fueling excitement among enthusiasts. Alongside the anticipated enhancements, there are expectations for MagSafe charging to make its way to the iPad, potentially requiring new chargers with higher-speed capabilities.

For the iPad Air, the updates are likely to include a larger display option, possibly 12.9 inches, and a processor upgrade. Although the specifics remain uncertain until the Let Loose event, there is hope for a more sensible camera position and the possibility of multiple ports on some models.

As the Let Loose event approaches, Apple enthusiasts can look forward to the official confirmation of these speculations and a closer look at the new hardware offerings. While the reveal will be online-only, the excitement of discovering the latest innovations in Apple’s lineup remains palpable among fans and industry observers alike.

So mark your calendars for May 7 at 7 a.m. PT/10 a.m. ET and tune in live at apple.com/apple-events to witness Apple ‘Let Loose’ its latest creations, potentially setting a new standard for tablets and stylus technology in the market.

Emad Mostaque Steps Down as CEO of Stability AI

Emad Mostaque, the CEO of Stability AI, has decided to step down from his role at the startup that brought Stable Diffusion to life. Mostaque is leaving to pursue decentralized AI, a move that signals a new chapter in the ever-evolving artificial intelligence industry.

In a press release issued late on Friday night, Stability AI announced that Mostaque is leaving the company to pursue decentralized AI initiatives. He is also stepping down from his position on the company’s board of directors. His departure paves the way for a fresh direction at Stability AI as it searches for a new CEO to lead the next phase of growth and innovation.

The board of directors has appointed two interim co-CEOs, Shan Shan Wong and Christian Laforte, to oversee the operations of Stability AI while a search for a permanent CEO is conducted. Jim O’Shaughnessy, chairman of the board, expressed confidence in the abilities of Wong and Laforte to navigate the company through its development and commercialization of generative AI products.

Mostaque’s departure from Stability AI follows recent reports of turmoil within the AI startup landscape. Forbes had reported on Stability AI’s challenges after key developers resigned, including three of the five researchers behind the technology underlying Stable Diffusion. Meanwhile, rival startup Inflection AI underwent significant changes when its CEO, DeepMind co-founder Mustafa Suleyman, joined Microsoft in what amounted to a talent acquisition by the tech giant.

Stability AI’s flagship product, Stable Diffusion, is widely used in text-to-image generation tools. The company recently introduced a new model, Stable Cascade, and began offering a paid membership for commercial use of its AI models. However, legal challenges over the data used to train Stable Diffusion, highlighted by a pending lawsuit from Getty Images in the UK, have added complexity to the company’s operations.

As the AI industry continues to evolve and face challenges, Emad Mostaque’s decision to step down as CEO of Stability AI and focus on decentralized AI marks a significant shift. It sets the stage for a new era of innovation and exploration in artificial intelligence, as companies strive to balance commercialization with openness in developing advanced AI technologies.